Have you ever been fascinated by how computers understand language? Pre-trained language models like GPT and BERT are at the forefront of natural language processing, enabling computers to analyze, understand, and generate language with remarkable accuracy. But which one is better suited to a given task? In this article, we will analyze GPT vs BERT.

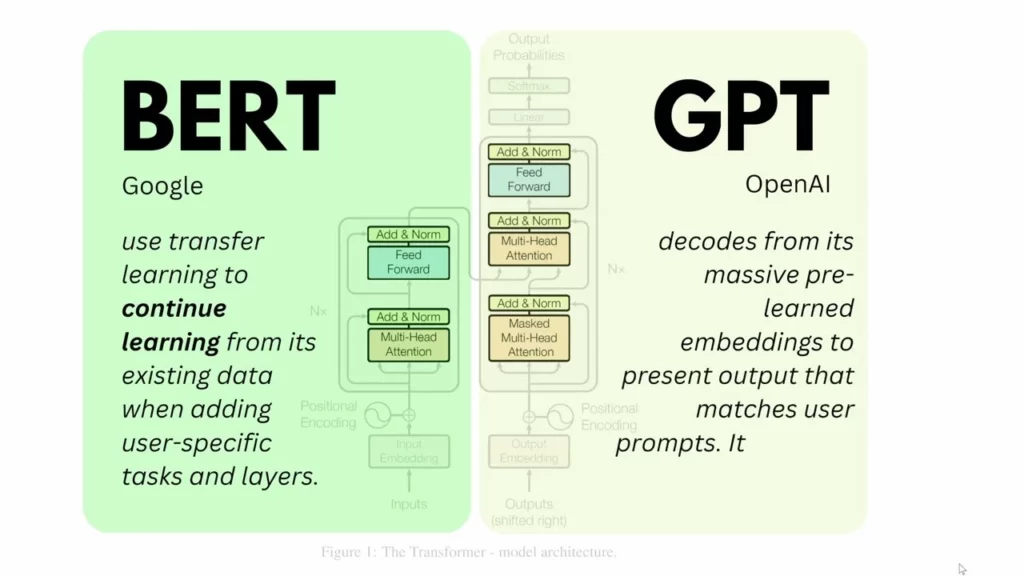

GPT is a language model developed by OpenAI that is designed to generate text. It is a deep learning model trained with unsupervised (more precisely, self-supervised) learning on vast amounts of text, learning to predict the next word. That skill lets it write new text, answer questions, summarize documents, and more. BERT is another pre-trained language model, developed by Google. BERT also learns from unlabeled text, but it is designed to understand language rather than generate it.

In this article, we will dive deep into GPT vs BERT, exploring their unique strengths and weaknesses. Whether you are an NLP practitioner or simply interested in how computers understand language, this guide will give you a clear understanding of these two powerful language models.

What is GPT?

Generative Pre-trained Transformer (GPT) is a family of language models that use deep learning to analyze vast amounts of text data and learn how to generate new text. Its ability to produce human-like text has the potential to transform applications ranging from chatbots to automated content creation, and it has had a major impact on the field of natural language processing.

Given a prompt, GPT can interpret the request and generate relevant, fluent text at a level that was previously unimaginable, opening up exciting new possibilities for communication and collaboration.

What is BERT?

BERT, or Bidirectional Encoder Representations from Transformers, is one of the most important developments in natural language processing. It underpins many of the language-understanding systems we interact with daily, including Google Search, and it has changed the way machines interpret our language.

What sets BERT apart from earlier language models is its ability to interpret each word in light of the words around it. It processes language bidirectionally, drawing on context from both the left and the right of each word, much as a human reader uses the whole sentence to resolve an ambiguous word. For example, it can tell that "bank" means something different in "river bank" than in "bank account".

Differences Between GPT and BERT

In this comparison of GPT vs BERT, let us start with the differences. GPT and BERT are two heavyweight language models that are often compared, so what distinguishes them?

One key difference lies in their approach to language processing. GPT follows an autoregressive approach: when predicting a word, it considers only the words to its left (the text that came before). BERT, on the other hand, takes a bidirectional approach and considers both the left and right context. As a result, BERT is well suited for tasks that require understanding the full context of a sentence or phrase, such as sentiment analysis or natural language understanding.
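
In Transformer implementations, this difference is commonly expressed as an attention mask that controls which positions each token may draw on. The plain-Python sketch below is illustrative only: the helper names and the example sentence are assumptions, and real models apply such masks inside their attention layers rather than printing them.

```python
# mask[i][j] == 1 means token i may use token j as context.

def causal_mask(n: int) -> list[list[int]]:
    """GPT-style (autoregressive): token i sees only positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n: int) -> list[list[int]]:
    """BERT-style: every token sees every position, left and right."""
    return [[1] * n for _ in range(n)]


tokens = ["the", "movie", "was", "not", "good"]
for name, mask in [("causal", causal_mask(len(tokens))),
                   ("bidirectional", bidirectional_mask(len(tokens)))]:
    print(name)
    for tok, row in zip(tokens, mask):
        # List the words this token is allowed to look at.
        visible = [t for t, m in zip(tokens, row) if m]
        print(f"  {tok:>6} sees {visible}")
```

Under the causal mask, "movie" is encoded without seeing "not good"; under the bidirectional mask, every word's representation can draw on the whole sentence.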

Another difference lies in their training data. Both models were trained on large text corpora that include Wikipedia and books. BERT was trained on about 3.3 billion words from BooksCorpus and English Wikipedia, while GPT-3 was trained on a much larger mix that adds web text: roughly 570GB of text filtered from about 45TB of raw Common Crawl data, along with other sources. This broader exposure can give GPT-3 more knowledge to draw on, which may help with tasks such as summarization or translation.

Finally, there’s the difference in size. Both models are large, but GPT-3 is far larger than BERT because of its architecture, with many more and much wider layers. The largest GPT-3 model has about 175 billion parameters spread across 96 layers, while BERT-Large has about 340 million parameters (BERT-Base has about 110 million).
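
Parameter count is set by the architecture (how many layers there are and how wide each one is), not by the amount of training data. A common back-of-the-envelope estimate, sketched below, counts roughly 12 * d^2 weights per Transformer layer (hidden size d) plus the embedding tables. Biases and layer-norm weights are ignored, and `estimate_params` is an illustrative helper, not a library function.

```python
def estimate_params(layers: int, d_model: int, vocab: int, context: int) -> int:
    """Rough Transformer parameter count.

    Attention has ~4*d^2 weights (query, key, value, output projections) and
    a feed-forward block with a 4*d hidden size has ~8*d^2, so ~12*d^2 per
    layer. Token and position embeddings add (vocab + context) * d.
    Biases and layer-norm weights are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = (vocab + context) * d_model
    return layers * per_layer + embeddings


# Published configurations: (layers, hidden size, vocabulary, context length).
configs = {
    "BERT-Large": (24, 1024, 30522, 512),
    "GPT-2 (largest)": (48, 1600, 50257, 1024),
    "GPT-3 (largest)": (96, 12288, 50257, 2048),
}

for name, cfg in configs.items():
    print(f"{name:>16}: ~{estimate_params(*cfg) / 1e9:.2f} billion parameters")
```

The estimates come out at about 0.33 billion for BERT-Large, 1.56 billion for the largest GPT-2, and 174.59 billion for the largest GPT-3, close to the commonly cited 340 million, 1.5 billion, and 175 billion.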

So, while both models have their strengths and weaknesses, it’s clear that GPT and BERT have different approaches to language processing and are better suited for different tasks.

Similarities Between GPT and BERT

GPT vs BERT is not all about differences; the two models have important similarities too. For example, both are trained with unsupervised (self-supervised) learning techniques, meaning they learn from vast amounts of text without the need for explicit labeling or supervision.
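
No labels are needed because the training targets come from the text itself. The simplified sketch below turns one unlabeled sentence into training examples for each objective: next-word prediction for GPT and masked language modeling for BERT. The 15% target rate and the 80/10/10 replacement split follow the original BERT paper; whitespace tokenization, sampling each position independently, and the helper names are simplifying assumptions, and BERT's second objective (next-sentence prediction) is omitted.

```python
import random

MASK = "[MASK]"


def next_token_examples(tokens):
    """GPT-style objective: predict each token from all tokens to its left."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_lm_example(tokens, vocab, mask_prob=0.15, seed=0):
    """Simplified BERT-style masked language modeling.

    Each position is chosen as a prediction target with probability mask_prob.
    A chosen token is replaced with [MASK] 80% of the time, with a random
    vocabulary word 10% of the time, and left unchanged 10% of the time.
    Returns the corrupted input and a {position: original_token} map.
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            targets[i] = tok  # the model must recover this token
            r = rng.random()
            if r < 0.8:
                inputs[i] = MASK
            elif r < 0.9:
                inputs[i] = rng.choice(vocab)
            # otherwise the original token stays in place
    return inputs, targets


sentence = "the film was slow at first but the ending was worth the wait".split()
for context, target in next_token_examples(sentence)[:3]:
    print(context, "->", target)

corrupted, targets = masked_lm_example(sentence, vocab=sentence, mask_prob=0.3, seed=1)
print(corrupted)
print(targets)
```

Either way, a single sentence yields many prediction problems, which is what lets both models learn from huge amounts of raw text.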

Furthermore, both GPT and BERT have proven to be highly effective language models, achieving state-of-the-art results on many natural language processing benchmarks when they were released. Both models have been used in various applications, from chatbots to language translation to sentiment analysis, and have been instrumental in advancing the field of natural language processing.

Another similarity between GPT and BERT is that they are both pre-trained language models that can be fine-tuned for specific tasks. This means that developers can take advantage of the models’ pre-existing knowledge of the language and further train them on specific datasets to achieve even better performance for their particular application.

In short, while GPT and BERT take different approaches to language processing, they share a common foundation of unsupervised learning, strong performance, and the flexibility to be fine-tuned for specific tasks.

GPT Use Cases

Now let us look at the use cases of each model, starting with GPT. GPT (Generative Pre-trained Transformer) by OpenAI has proven to be a versatile language model with potential uses across many industries. Here are some examples:

1. Text Generation: One of the primary use cases of GPT is text generation. It can produce human-like text in diverse formats, including articles, poems, and song lyrics.

2. Chatbots: GPT can power sophisticated chatbots that produce natural-sounding dialogue, allowing them to engage with users in a way that resembles human conversation.

3. Summarization: GPT can condense lengthy text into shorter, more digestible summaries, which is useful for research, content creation, and news writing.

4. Translation: GPT can be fine-tuned for specific languages and used for machine translation between languages.

5. Sentiment Analysis: GPT can analyze text and classify its emotional tone as positive, negative, or neutral. This is useful for monitoring customer feedback or analyzing social media posts.

6. Content Creation: GPT can be used to automatically generate content such as product descriptions, emails, or social media posts.

Overall, GPT has the potential to transform natural language processing, having already demonstrated impressive performance across a wide range of tasks. As the technology continues to advance, even more innovative use cases are likely to emerge.

BERT Use Cases

Now let us look at BERT's use cases. BERT (Bidirectional Encoder Representations from Transformers) has proven to be a strong language model with a wide range of potential applications. Here are some examples:

1. Question-Answering: BERT has been used to build highly accurate question-answering systems, which answer a question by locating the span of a passage that contains the answer.

2. Sentiment Analysis: Like GPT, BERT can be used to analyze the sentiment of text. However, because its bidirectional architecture lets it use the full context around each word, it is particularly effective for sentiment analysis.

3. Text Classification: BERT can be used for text classification tasks such as spam filtering, content moderation, or identifying fake news.

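4. Language Translation: BERT is an encoder-only model, so it does not generate translations on its own, but pre-trained BERT-style encoders (including multilingual versions) can serve as components of machine translation systems.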

5. Named Entity Recognition: BERT has shown impressive performance in named entity recognition, which involves identifying entities such as people, organizations, or locations in a given text.

6. Chatbots: BERT can power the language-understanding side of chatbots, for example by detecting what the user wants or selecting the most appropriate response from a set of candidates, though it does not generate free-form replies on its own.
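
For question answering, the original BERT paper fine-tunes the model to score every passage token as a possible start and as a possible end of the answer, then picks the highest-scoring valid span. The sketch below shows only that final selection step. The scores are made-up numbers standing in for a fine-tuned model's outputs, and `best_answer_span` is a hypothetical helper.

```python
def best_answer_span(tokens, start_scores, end_scores, max_len=10):
    """Pick the span (i, j) maximizing start_scores[i] + end_scores[j],
    subject to i <= j and an answer of at most max_len tokens."""
    best, best_score = None, float("-inf")
    for i in range(len(tokens)):
        for j in range(i, min(i + max_len, len(tokens))):
            score = start_scores[i] + end_scores[j]
            if score > best_score:
                best, best_score = (i, j), score
    i, j = best
    return " ".join(tokens[i : j + 1])


# Hypothetical per-token scores for the question "Who developed BERT?"
passage = "BERT was developed by researchers at Google in 2018".split()
start = [0.1, 0.0, 0.2, 0.3, 1.2, 0.4, 2.8, 0.1, 0.0]
end = [0.0, 0.1, 0.1, 0.2, 0.6, 0.3, 3.1, 0.2, 0.5]
print(best_answer_span(passage, start, end))  # prints "Google"
```

The `i <= j` constraint keeps the end from coming before the start, and `max_len` stops the model from returning implausibly long answers.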

Overall, BERT has demonstrated impressive performance on a range of natural language processing tasks and has potential applications in industries from healthcare to finance to marketing. As the technology continues to advance, we can expect even more creative use cases to emerge.

Wrapping Up

In conclusion, GPT and BERT are two powerful language models that have transformed the field of natural language processing. While they have some similarities, they also have some key differences in their architecture, training datasets, and use cases.

Both models have been applied in industries from healthcare to marketing to finance and have demonstrated impressive performance on many natural language processing tasks. Ultimately, the choice between GPT and BERT depends on the specific use case: GPT is the natural fit for generating text, while BERT excels at tasks that require understanding it. Both models are likely to remain at the forefront of this rapidly evolving field.

Hope this article gave you an insight into GPT vs BERT!

Frequently Asked Questions

1. What is the major distinction between GPT and BERT?

The main difference between GPT and BERT is their architecture. GPT is an autoregressive model, while BERT is a bidirectional model.

2. What does it mean for GPT to be autoregressive?

Being autoregressive means that GPT generates text one token (a word or part of a word) at a time, with each new token predicted from all the tokens that came before it.
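
The loop below sketches that process: predict the next word, append it, and feed the longer text back in. As a loudly labeled simplification, a toy bigram counter stands in for the neural network. It looks only at the last word, whereas GPT conditions on the entire preceding context. Decoding is greedy (always the most likely next word); real systems often sample instead. The helper names are illustrative.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which word follows which (a toy stand-in for a trained model)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_words=5):
    """Autoregressive loop: predict one word, append it, repeat."""
    words = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known continuation, so stop early
        next_word = followers.most_common(1)[0][0]  # greedy choice
        words.append(next_word)  # the new word becomes context for the next step
    return " ".join(words)


corpus = [
    "the model writes one word at a time",
    "one word at a time builds a sentence",
    "the model reads the prompt first",
]
counts = train_bigram(corpus)
print(generate(counts, "the model writes"))  # the model writes one word at a time
```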

3. What are the benefits of BERT’s bidirectional architecture?

BERT’s bidirectional architecture lets it consider both left and right context when making predictions, so it can grasp the complete context of a sentence or phrase more effectively.

4. How were GPT and BERT trained?

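Both models were trained on large text corpora that include Wikipedia and books. BERT was trained on about 3.3 billion words from BooksCorpus and English Wikipedia, while GPT-3 was trained on a much larger mix that includes roughly 570GB of web text filtered from about 45TB of raw Common Crawl data.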

5. Which model is better suited for text generation tasks?

GPT is better suited for text generation because its autoregressive architecture is built to produce text one token at a time, yielding fluent, human-like output.

6. Which model is better suited for sentiment analysis or NLU?

Due to its bidirectional architecture, BERT is particularly well-suited for tasks that require a thorough understanding of the context of a sentence or phrase, such as sentiment analysis and natural language understanding (NLU).

7. What are some potential use cases for both GPT and BERT?

GPT can be used for text generation, language translation, chatbots, and more. BERT can be used for question-answering, sentiment analysis, text classification, named entity recognition, and more.